Posted on Thu 26 Oct 2017

RAMCamp Kyoto

David gives us a summary of the goings-on at RAMCamp 2017 in Kyoto.

Project

RAM Workshop

In 2016, dancers, artists, circus performers and choreographers experimented with RAM, developed by YCAM in Japan. RAM uses motion capture sensors to create real-time visual feedback through virtual environments. That year we hosted a two-day workshop which invited a number of performers, choreographers and programmers to come and experiment with the Reactor for Awareness in Motion kit.

What is Reactor for Awareness in Motion…

RAM (for short) is a low-cost, open-source motion capture system developed by YCAM in collaboration with Yoko Ando and The Forsythe Company. It allows programmers and performers to work together to create unique rehearsal environments (Scenes). The kit consists of two components: the Motioner, a suit fitted with eighteen Inertial Measurement Unit (IMU) sensors, and the RAMDanceToolkit, a software suite and code base for translating the movement data into visuals.

RAMCamp Kyoto

RAMCamp 2017 gathered programmers, dancers and choreographers from Japan, China and South Korea for a five-day intensive workshop in Kyoto as part of the Kyoto Experiment. Laura Kriefman (Guerilla Dance Project) and I were invited as guest professors to support the participants and share our knowledge of RAM.

This is a summary of what happened and what was created as part of the RAMCamp.

After the introductions, the participants were split into groups to take part in an ideas generation game called World Cafe. Participants moved from table to table, leaving one person behind to explain their table's ideas to the next group, who could either build on them or generate new ones. And oh my, were there some amazing concepts:

- Sea Urchin: lines would extrude from the virtual avatar and collide with virtual objects, with the collisions fed back to the performer.

- Body Warp: one performer's limbs would be randomly replaced by those of other performers.

Participants then divided themselves into teams, each built around one of the ideas generated during the World Cafe. One stipulation was that each team had to include at least one dancer and one programmer.

To give the participants a break from generating ideas, they were shown the Perception Neuron kit (a commercially available alternative to the Motioner) and taught how the system works and how to set it up on their machines.

The YCAM team then demonstrated the RAMDanceToolkit and some of its prebuilt scenes.

On Day 2, participants took part in a dance workshop led by Kenta Kojiri, which helped both dancers and programmers understand how to communicate their ideas when creating scenes.

Motoi Shimizu then gave an introductory coding workshop, which taught those who were interested the fundamentals of programming with openFrameworks.

Whilst the teams worked on their scenes for the showing, Laura and I had some time to work on our own.

We experimented with Moving Gesture Recognition, where a performer moving in a certain way could trigger interactive content. This was a promising idea, but there wasn't enough time to fully implement the learning and the actions in the scene, so we created two different scenes instead.

The first scene, called Flicker, monitored the position of specific joints; as a joint's orientation changed, our scene triggered audio cues inside Ableton. We then fed this audio into Laura's Kicking the Mic setup. For those who don't know it, Kicking the Mic is an audio-responsive LED dress that reacts to tap dance: as the audio passes through the system, it triggers colour animations on the dress.
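The post doesn't say how Flicker decided that a joint had turned enough to fire a cue, or how the cue reached Ableton. Purely as an illustration, here is a minimal C++ sketch of one way to do the first part: compare the joint's current orientation with its orientation at the last cue, and fire when the angle between the two exceeds a threshold. The `Quat` type, `angleBetween` and `OrientationTrigger` are hypothetical names, not part of the RAMDanceToolkit, and sending the cue on to Ableton is left out.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Hypothetical unit quaternion for a joint's orientation (not a RAMDanceToolkit type).
struct Quat {
    double w, x, y, z;
};

// Angle in radians between two unit quaternions.
// Taking |dot| treats q and -q as the same rotation, which they are.
double angleBetween(const Quat& a, const Quat& b) {
    double d = std::fabs(a.w * b.w + a.x * b.x + a.y * b.y + a.z * b.z);
    d = std::min(d, 1.0);  // guard acos against rounding just above 1
    return 2.0 * std::acos(d);
}

// Hypothetical helper: fires a cue whenever the joint has turned more than
// `thresholdRad` since the previous cue.
class OrientationTrigger {
public:
    OrientationTrigger(const Quat& start, double thresholdRad)
        : reference_(start), threshold_(thresholdRad) {
        assert(thresholdRad > 0.0);
    }

    // Call once per frame with the joint's latest orientation.
    // Returns true when a cue should be sent.
    bool update(const Quat& current) {
        if (angleBetween(reference_, current) > threshold_) {
            reference_ = current;  // the next cue is measured from here
            return true;
        }
        return false;
    }

private:
    Quat reference_;
    double threshold_;
};
```

Resetting the reference after each cue means a slow, continuous turn fires a new cue every time it sweeps past another threshold's worth of rotation, rather than firing once and then staying silent.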

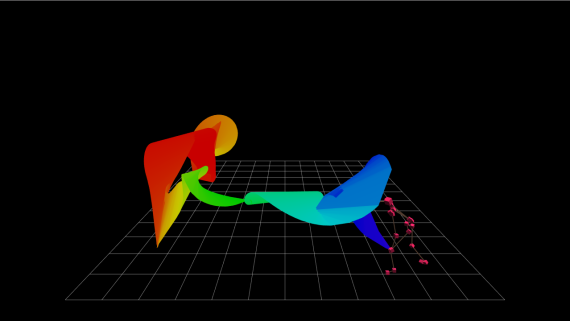

Our second scene, called Colour Thread, attached threads to specified limbs. As the dancer moved around the scene, these threads connected and formed a coloured mesh that stayed in the space for 20 seconds. If the performer walked through this mesh, the LED dress responded by showing the colour from the scene. The idea behind this scene was to show performers where their previous movements had been using the LEDs instead of a screen.
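The timing behaviour of Colour Thread can be illustrated with a small C++ sketch: keep a time-ordered trail of a limb's positions, forget anything older than 20 seconds, and check whether the performer is close to any remembered point. This is a simplification under stated assumptions: `Point3`, `ThreadSample` and `ColourThread` are hypothetical names, the walk-through test is point-to-sample distance rather than a true mesh intersection, and rendering and driving the LED dress are not shown.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>

// Hypothetical 3D point (in openFrameworks you would more likely use glm::vec3).
struct Point3 {
    float x, y, z;
};

// One timestamped position of the tracked limb.
struct ThreadSample {
    Point3 pos;
    double time;  // seconds since the scene started
};

// Remembers the recent path of one limb and forgets anything older than
// `lifetimeSec` seconds (20 s in the scene described above).
class ColourThread {
public:
    explicit ColourThread(double lifetimeSec) : lifetime_(lifetimeSec) {
        assert(lifetimeSec > 0.0);
    }

    // Record the limb position at time `now`, then drop expired samples.
    void addSample(const Point3& p, double now) {
        samples_.push_back({p, now});
        expire(now);
    }

    // Samples arrive in time order, so expired ones are always at the front.
    void expire(double now) {
        while (!samples_.empty() && now - samples_.front().time > lifetime_) {
            samples_.pop_front();
        }
    }

    // True if `p` lies within `radius` of any remembered sample,
    // i.e. the performer is "walking through" the thread.
    bool touches(const Point3& p, float radius) const {
        for (const ThreadSample& s : samples_) {
            float dx = s.pos.x - p.x;
            float dy = s.pos.y - p.y;
            float dz = s.pos.z - p.z;
            if (dx * dx + dy * dy + dz * dz <= radius * radius) {
                return true;
            }
        }
        return false;
    }

    std::size_t size() const { return samples_.size(); }

private:
    double lifetime_;
    std::deque<ThreadSample> samples_;
};
```

A `std::deque` fits here because new samples are appended at the back while expiry only ever removes from the front.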

On Day 3, the groups were encouraged to share their ideas and scenes with one another.

On the final day, each group gave a series of short performances using the scenes they had built (most are untitled).

Group 1 created two scenes. The first used the rotation and position of the performer's arms and shoulders to control the pitch and modulation of an audible sine wave, while individual characters were drawn onto the virtual avatar's joints.

The second scene used simple lines emitted from each of the performer's joints, which collided either with the ground or with the other performer.

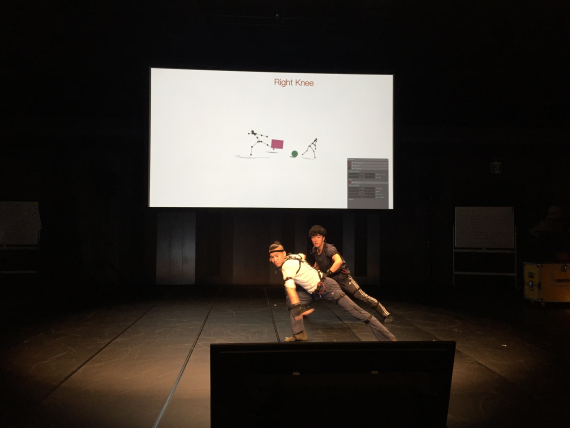

Group 2 also managed to create two scenes. The first used three performers, two in Perception Neuron suits and the third without. The unsuited performer attempted to mimic certain body parts of the suited performers. On screen, the virtual avatars attempted to control a sphere, with one performer controlling its rotation and the other its position.

Their second scene was fantastic: in essence, a 3D version of Twister. Performers improvised on stage until a virtual box appeared on screen; they would then move to put a randomly chosen limb or joint into the virtual box.

Group 3 made three unique scenes. The first, aptly called Spiderman, allowed the performer to throw lines across the virtual space, creating smaller spaces to navigate.

The second, called Visual Studio, generated 3D geometry at the centre of the virtual space. As the performer moved around the space, the geometry would expand, rotate and warp.

Their third scene, called PaperBird, was exactly that: a paper airplane floating in the virtual space. A limb was selected at random, and its movement affected the flight path of the plane.

The fourth group created two scenes. The first, called Petal, generated virtual petals on the floor, which then reacted to the performer's movements.

The second scene, called Orbit, generated musical orbs that orbited the performer. The quicker they moved, the more orbs were generated; as the performer moved through the space and made contact with the orbs, musical notes played, making the performer aware of his or her position.

It is extremely exciting that these incredible scenes were created in such a short space of time, with the added complexity of translating between three or four languages. To finish, here are three things I have learnt over the past five days:

- Try things differently: In Kenta's performance workshop, programmers were asked to dance, explain their rationale and think of creative responses to their movements. Likewise, the performers were asked to think like programmers: how might I make this happen, what might the limitations be, and so on. It's a refreshing reminder that we all need to take a side step sometimes to move forward.

- Be the Zero: Many moons ago (seven years, I checked) I asked a specific user on a forum for some help, which he gladly gave. I thanked him and moved on to other projects. Finding that user (James Kong) in the workshop as a participant was a wonderfully humbling moment. We were in a room with some of the most talented and gifted performers, coders and choreographers, yet we were there as the professors. Chris Hadfield talks about this in his book, An Astronaut's Guide to Life on Earth: never try to be the plus one. Be the listener, be humble, be the zero.

- Say yes: Most of the scenes created at this workshop came from people saying yes. An incredible project can grow from the inkling of an idea.