Studio blog

Posted on Fri 4 Oct 2024

Patterns in Practice Artist Residency Reflections

Craig Scott on the chaos of improvising with an 'intelligent' guitar

Credit: Shamphat Photography

Posted by

Victoria Tillotson

Victoria (she/her) is Watershed's Head of Talent Development, supporting artists and producers to access new ways of working, build strong collaborative networks and community.

Hosted by

Craig Scott

Performer, composer and creative technologist Craig Scott creates sound works for human and non-human performers.

Craig Scott is a composer, improvising guitarist and sound artist. He joined Pervasive Media Studio to undertake our Patterns in Practice Artist Residency - an opportunity to develop ideas and conversations exploring data mining and machine learning.

Working with human performers, robotics, custom built digital and analogue hardware, Craig's work is wildly inventive and reflects on the tension that exists between human and machine-made music. He brought this practice to the Residency, which was part of a wider Patterns in Practice research project led by the University of Sheffield and UWE Bristol, funded by UKRI. The project explored how human beliefs, values and feelings affect how we engage with data mining (the practice of analysing large databases in order to generate new information) and machine learning (computer systems that are able to learn and adapt without following explicit instructions, by using algorithms and statistical models to analyse and draw inferences from patterns in data). The residency - which drew to a close earlier in the year - was an opportunity for Craig to delve into the research and develop artwork in response. We’re delighted to share Craig’s reflections on his time with us.

Craig Scott says:

The Patterns in Practice Residency was a great opportunity to explore an idea I had been thinking about, and tinkering with, for a little while – the creation of an improvised human/machine duet with a semi-automated electric guitar. I am largely self-taught. I believe self-sufficiency is very important, which, in the past, has led me to build my own home studio and my own bespoke instruments, and to self-record, produce, release and promote my own work. Through relentless persistence, this has led to the showcasing of my live and recorded work in the UK, Poland, the Netherlands and the USA, and in 2023 I was nominated for the prestigious Paul Hamlyn Composer Awards.

I initially taught myself electronics to make my own recording equipment (due to financial constraints). More recently, via various rabbit holes, this has led me to build automated and semi-automated acoustic instruments, often from recycled or discarded materials, designed to be played in collaboration with improvising musicians.

These are:

- My semi-automated electric guitar;

- In collaboration with artist Hollie Miller, a wearable instrument that explores the collection of human bio data to manipulate sound and light, creating a realtime responsive performance environment;

- And my medio-core ineptronica recording project focused on digitally animating acoustic instruments.

Whilst I value the freedom and DIY ethic at the heart of my practice, I was eager to undertake the Patterns in Practice Residency, which offered a rare opportunity to work with Machine Learning (ML) and Artificial Intelligence (AI) specialists and absorb the research learnings within Patterns in Practice into my own work. It has been an extremely valuable and fruitful collaboration, enabling me to create a performance-ready instrument, grow new areas of my practice and make valuable connections within Watershed’s Pervasive Media Studio community.

Credit: Jack Offord

I initially called my project ‘Improvised Human Machine Conversations - Instrumental Extensions and Amputations’, which encapsulated everything I was thinking about at the time. The first part of the title is self-explanatory - it's me improvising on an instrument that will ‘answer back’ to me by playing itself. The second part comes from Marshall McLuhan's idea that every technological extension is also an amputation: whatever you give over to technology to control, you will, over time, lose the ability to manipulate yourself. This taps into my broader work and ideas, which reflect our evolving human relationships with technological appendages, and the effect these relationships have on personal and collective mental states, on our perception of reality, and on how we relate to our own and each other's bodies through our tools.

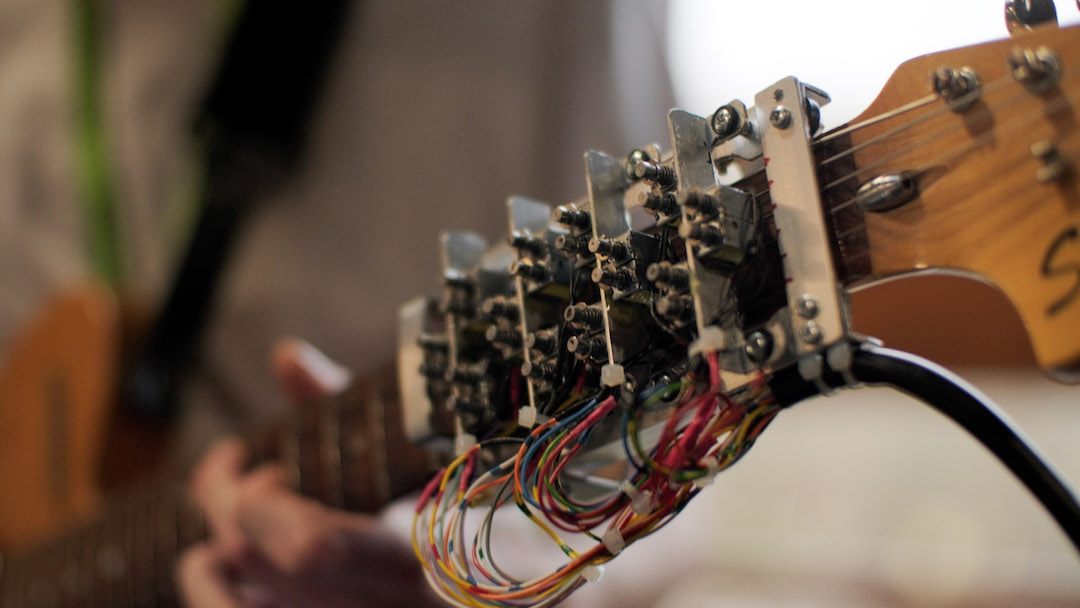

The prototype instrument I created during the residency is an electric guitar on which a human and a machine duet simultaneously. The guitar already had a bank of digitally controlled solenoids installed on the bridge, something similar to piano hammers, that strike individual strings (allowing the computer to contribute rhythmically).

Credit: Shamphat Photography

For this residency I built a second appendage for the fretboard of the guitar. This enables the computer to control the bottom two and a bit octaves, and everything in between is free for the human musician to play. This allows a machine learning algorithm (or AI – if you insist!) to contribute melodically and harmonically.

Credit: Shamphat Photography

During the residency I have been working towards being able to improvise in a note-for-note exchange with the ML/AI algorithm. This very short feedback loop between human input and computer response allows a much more conversational and malleable exchange than other music ML/AI systems I have experimented with.

My aim has been to completely recontextualise a very familiar instrument, forcing the breaking of habits, mutation of familiar patterns and opening up new territories.

I'm quite drawn to the chaos of it. Up until recently, I thought about digital control as precise, exacting repetition. But this research has uncovered something that feels less exact and much more chaotic and closer to the kind of density of our surrounding digital world - at its heart it is all very precise, but there's so much of it happening, on so many levels at once, that it becomes a very chaotic yet tactile environment to be a part of. I’ve also long held a fascination with the uncanny juxtaposition of exact digital repetition/replication vs. the inconsistencies of the acoustic world and human performance. My research goes some way toward my understanding of that uncanniness.

Now that the residency has drawn to a close, I’m reflecting on my starting point and considering changing the name of the work. I’m interested in how technologies have influenced or dictated the evolution of music, both in its creation and in how we listen: from the linearity of classical music in its relation to the printed word / printing press, to the cyclical repetition and immersive environment of digital dance musics in relation to the recursive loop of digital code. So I’m interested in what becomes possible in terms of sound creation/perception due to new technologies, what is lost, and what is gained from this tussle.

I'm also interested in how the content of previous technological environments is re-presented and mutated as it is assimilated into the new – this feels more self-evident with the adoption of ML/AI, as these systems must be fed data/content from previous eras/environments in order to function.

I have decided to change the title of the project to 'Craig Against the Machine'. As well as being a playful nod to my teenage musical tastes, I think it encapsulates our current societal relationship with AI as 'big brother/other', and the idea that musically this is somewhere between duetting and fighting for control over the instrument with the ML/AI system.

Credit: Shamphat Photography

Through improvising in conversation with the instrument, I have been struck by how much I found myself projecting meaning onto, and assuming intent in, the ML/AI responses – similar to the way in which, when interacting with a chat-bot, it is easy to project onto the AI, assuming it truly understands the conversation and is generating original thoughts/responses.

Co-creating with such a system required me to constantly re-contextualise how I was perceiving the ground (harmonic/rhythmic framework) of my contribution to the duet. In this process, I found the ML/AI became a valuable, mercurial reflective surface with which to interrogate my own perceptual assumptions.

I have gained so many things from this Residency – a whole new community, ongoing support, professional development and a better understanding of folding academic research into my practice. But perhaps one of the most exciting things for me is that it has re-framed my relationship with automated instrument design and opened up a lot of new potential.

Going forwards, I would like to continue to develop the instrument, further explore improvised human/machine duets and how they mutate my own musical language, and try expanding the concept to a group improvised context with other human musicians. This might begin with a duo, both improvising on instruments that are partially automated by machine learning, to observe how human-to-human conversation is mutated through the mediation of these technologies.

I also want to further explore the feedback loops or folding between machines made to emulate humans and human performers emulating machines. ‘We shape our tools and thereafter they shape us’ (John M. Culkin in an article about McLuhan’s ideas, 1967). And of course, I hope to get the work I have created thus far out there, to perform and host conversations, sharing the ideas it encapsulates with future audiences.

If you would like to learn more about this project:

Tune into the Patterns in Practice Podcast

Watch a Pervasive Media Studio Lunchtime Talk

Explore the project webpage

And if you are interested to know more about the wider Patterns in Practice research project, you can read the interim report

Patterns in Practice is led by the University of Sheffield and UWE Bristol in partnership with Watershed, funded by UKRI. It seeks to understand the cultures of machine learning in science, education, and the arts.
