Posted on Fri 12 Apr 2024

Testing Some Approaches to Producing a Vocal System.

This post reflects on some of the practical skills I developed and processes I used during the winter residency in the PMS at Watershed.

Posted by

Dave Evans

Dave currently works with sound and livestreaming and is exploring how objects and spaces can be given voice. His work reframes the livestream, usually associated with efficiency or entertainment, as an expressive opportunity in an attempt to tackle the complex problem of hierarchies of social and…

Project

Investigating a Domestic Vocal System

This project asks if domestic space can be considered as a vocal system, and if so, what sort of sounds would it make?

As I mentioned in my previous post, I have been broadcasting the sounds and noises of my home and wanted to use the residency to test whether these could be considered otherwise, maybe as voice. At the beginning of the residency, I asked myself: how can sound or noise be elevated to the quality of voice? I began by using some pre-built effects that are specifically made to emulate voice. I bought a TC Helicon Talkbox Synth vocoder and wondered what it would sound like if, for instance, I ran the sound of the washing machine through it. What quickly became apparent is that a vocoder combines two signals, an instrument signal and an existing voice signal, to produce a third. Not having an actual voice to use (and not wanting to use my own), I had to improvise with another device. I tried a number of things, but settled on using the vibrations of our fridge.

What was produced was nothing like a voice at all, just a strange, blended sound that belonged to neither object. There were, perhaps, some bursts of speech-like muttering, but that’s probably still a stretch. I’m not sure what I was expecting really, but what I did like was that these two devices were part of a system that produced a noise, and that they modulated one another, just as the lungs, larynx, tongue and so on work together to produce voice in the human. Playing with this effect was really hit and miss, because lots of the sounds produced by devices are not clear notes that can be transposed onto one another. I did briefly experiment with feeding in the vocal track from a song ('Lord of Light' by Hawkwind, inspired by a Roger Zelazny book of the same name from 1968) and having it modulated along with the sound of the fridge. This also sounded quite strange, but I didn’t find it very interesting. If our house is going to sing a song, it probably won’t be Hawkwind.
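For anyone curious about what "two signals in, a third signal out" means in practice, here is a minimal sketch of a generic channel vocoder in plain Python. It is not how the TC Helicon pedal works internally, which I can't confirm. It only illustrates the general idea: the loudness of the "voice" input in each frequency band is used to turn the same band of the "instrument" input up or down. The function names, the band centres and the synthetic stand-in signals are all assumptions made for illustration.

```python
import math


def bandpass(signal, centre_hz, sample_rate, q=4.0):
    """Second-order band-pass filter (standard biquad, unity gain at the centre)."""
    w0 = 2 * math.pi * centre_hz / sample_rate
    alpha = math.sin(w0) / (2 * q)
    b0, b2 = alpha, -alpha
    a0, a1, a2 = 1 + alpha, -2 * math.cos(w0), 1 - alpha
    out = []
    x1 = x2 = y1 = y2 = 0.0
    for x in signal:
        y = (b0 * x + b2 * x2 - a1 * y1 - a2 * y2) / a0
        out.append(y)
        x2, x1 = x1, x
        y2, y1 = y1, y
    return out


def envelope(signal, smoothing=0.01):
    """Track loudness: rectify the signal, then smooth it with a one-pole low-pass."""
    level = 0.0
    out = []
    for x in signal:
        level += smoothing * (abs(x) - level)
        out.append(level)
    return out


def vocode(modulator, carrier, sample_rate, bands=(200, 400, 800, 1600, 3200)):
    """Shape `carrier` with the per-band loudness of `modulator`.

    `modulator` plays the role of the voice input and `carrier` the
    instrument input. The band centres are arbitrary illustrative choices
    and must stay below half the sample rate.
    """
    n = min(len(modulator), len(carrier))
    out = [0.0] * n
    for centre in bands:
        loudness = envelope(bandpass(modulator[:n], centre, sample_rate))
        band = bandpass(carrier[:n], centre, sample_rate)
        for i in range(n):
            out[i] += loudness[i] * band[i]
    return out


# Synthetic stand-ins (assumptions, not recordings): a pulsing hum in the
# voice slot and a buzzy square wave in the instrument slot.
SAMPLE_RATE = 8000
hum = [math.sin(2 * math.pi * 400 * i / SAMPLE_RATE) * (1 + math.sin(2 * math.pi * 3 * i / SAMPLE_RATE))
       for i in range(2000)]
buzz = [1.0 if (i * 110 / SAMPLE_RATE) % 1 < 0.5 else -1.0 for i in range(2000)]
blended = vocode(hum, buzz, SAMPLE_RATE)
```

If the signal in the voice slot goes silent, so does the output, which is one way of hearing that the two inputs really are modulating each other rather than simply being added together.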

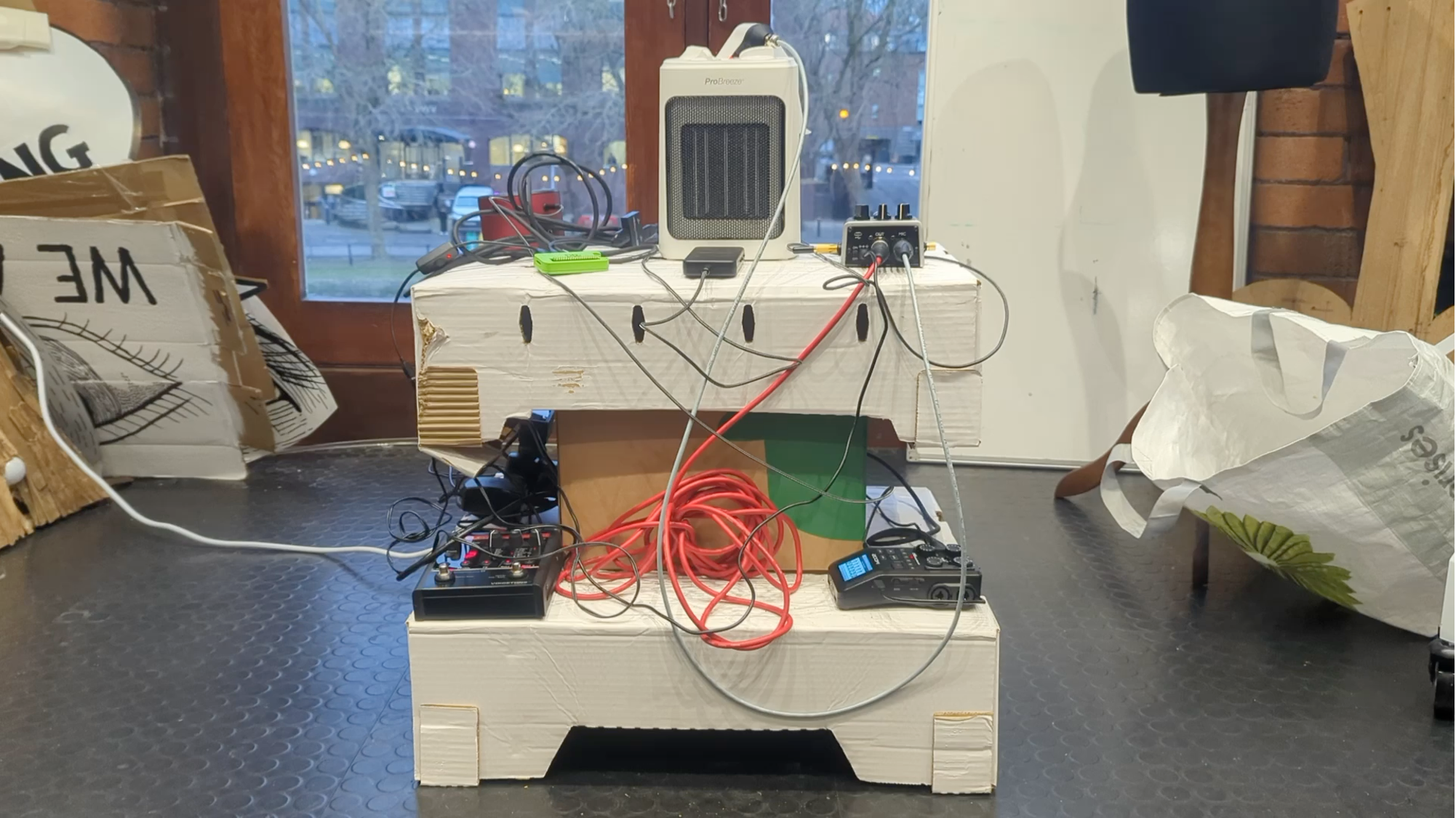

Continuing in this way, using prebuilt effects, I bought a TC Helicon Harmony-G XT. I wanted to find a sound at home and then use the pedal to produce harmonies around it. The pedal allowed me to produce harmonic layers of the original sound, as well as adding effects to produce richer sounds. One afternoon in the PMS maker space I attached both of these pedals to a LOM Geophone and SOMA Ether2, then attached these to a small portable fan, the ProBreezer, and had a play. The sound that came out was, again, far from voice-like, but was pleasingly rich and deep, with a lovely oscillating quality that I could have listened to all day. I attached a small amp and played it quietly into the space, and had a couple of comments from studio users about how nice it was. I might describe it as sonorous, which was definitely moving in the right direction. There is an image of the set-up below. The quality of harmony and oscillation produced something closer to singing than talking, which makes more sense in the context of giving the house a voice. It is obviously never going to talk, but maybe it can sing? Singing has been fruitful to think about and work with, as it doesn’t need to include language. I have been listening to Harold Budd’s ‘The Pavilion of Dreams’ album from 1978, which includes minimal instrumentation and vocals but no language; it is expressive sound using the voice. Maybe this is more the type of voice that the house might have?
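The idea of building "harmonic layers" around a found sound can also be sketched in a few lines of Python. This is only an illustration under stated assumptions, not a description of what the Harmony-G XT does: it treats the input as a short looped drone (like a fan), plays copies of that loop back at different speeds to shift their pitch, and averages the copies together. The function names and the choice of intervals (a major third and a fifth above the original) are hypothetical.

```python
import math


def read_at_speed(loop, ratio):
    """Play `loop` back at `ratio` times normal speed, wrapping at the end.

    A ratio of 2.0 raises the pitch by an octave. Positions that fall
    between samples are filled in by linear interpolation.
    """
    n = len(loop)
    out = []
    for i in range(n):
        pos = (i * ratio) % n
        lo = int(pos)
        hi = (lo + 1) % n
        frac = pos - lo
        out.append(loop[lo] * (1 - frac) + loop[hi] * frac)
    return out


def harmonise(loop, semitone_offsets=(0, 4, 7)):
    """Layer pitch-shifted copies of `loop` and average them.

    Each offset is in semitones relative to the original; one semitone
    corresponds to a speed ratio of 2 ** (1 / 12).
    """
    layers = [read_at_speed(loop, 2 ** (s / 12)) for s in semitone_offsets]
    return [sum(samples) / len(layers) for samples in zip(*layers)]


# Synthetic stand-in for a steady fan drone (an assumption, not a recording):
# a 100 Hz tone lasting exactly ten cycles so that it loops cleanly.
SAMPLE_RATE = 8000
drone = [math.sin(2 * math.pi * 100 * i / SAMPLE_RATE) for i in range(800)]
chord = harmonise(drone)
```

Changing pitch by changing playback speed only really suits steady, looping sounds, which is part of why a drone is used here. Anything with a clear rhythm would speed up or slow down along with the pitch.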

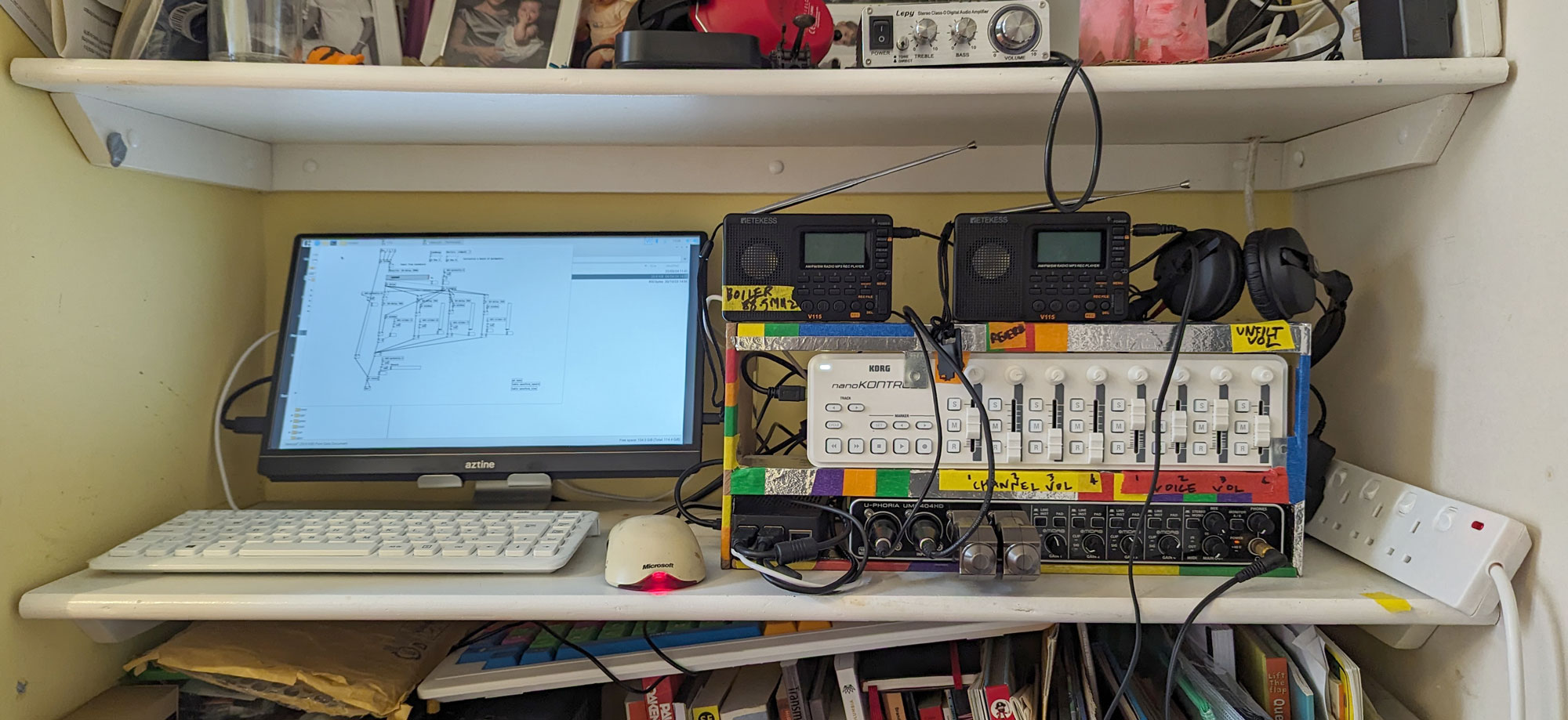

Twiddling knobs on two effects pedals was feeling quite limiting, so I wanted to do something that gave me more control and the ability to harmonize and extend the sounds further. I had a couple of good chats in the PMS about building my own digital effects, maybe using Python or Pure Data. I tried working with Pure Data and, once I had done a few tutorials, was quite taken with it. Pure Data is an open-source visual programming language for creating interactive audio works on a computer. It has been around since the 1990s and there is a large community of users and preexisting patches to tinker with. Using a Raspberry Pi and a Korg NanoKontrol2, I managed to build my own custom effects unit that can take four inputs from different places, add an array of harmonies and effects, and broadcast the result to the web.

There were a few hiccups along the way: I had to update the kernel on the Raspberry Pi 4 to get rid of choppy audio, and I wasted days trying to integrate Darkice before discovering Liquidsoap for output to web radio. Still, it feels as if the results are moving in the right direction. I built a case for it all and decorated it with my kids, so it now looks like a proper ‘thing’ and sits proudly on a shelf at home. I also made some more considered sensing objects that send signals from places around the house to this ‘thing’. Again, these are made up of sensors, radio transmitters, bits of tape and so on. This one below sends the sounds of the electromagnetic field of the broadcast server, a Raspberry Pi, via a low-power FM signal to the shelf above for streaming to the web.

That it is beginning to become a coherent system is quite important. Previously I had been getting stuff out, setting it up, making a tangle and doing a broadcast, then putting it all away again. My initial proposal for the residency was to look to models of vocal systems and try to map them more deliberately onto our house. There are many, but I have been taken with a basic model of the human vocal system as a connection of subsystems: the respiratory subsystem (the lungs, diaphragm, etc.) that provides the ‘power’, the phonatory subsystem (the larynx etc.) that provides the sound, and the articulatory subsystem (the teeth, lips, tongue, etc.) that shapes the sound. What has emerged during the residency is a series of subsystems that loosely reproduce this human system. The box on the shelf that uses Pure Data is a kind of articulatory subsystem that shapes the sounds sent to it from around the house by the smaller sensors. These sensors might loosely be considered a phonatory subsystem, but instead of one voice box, there are now four. This is sort of where I want to be, and I am still tinkering away to get it finished. Time is limited, but I will post more soon!

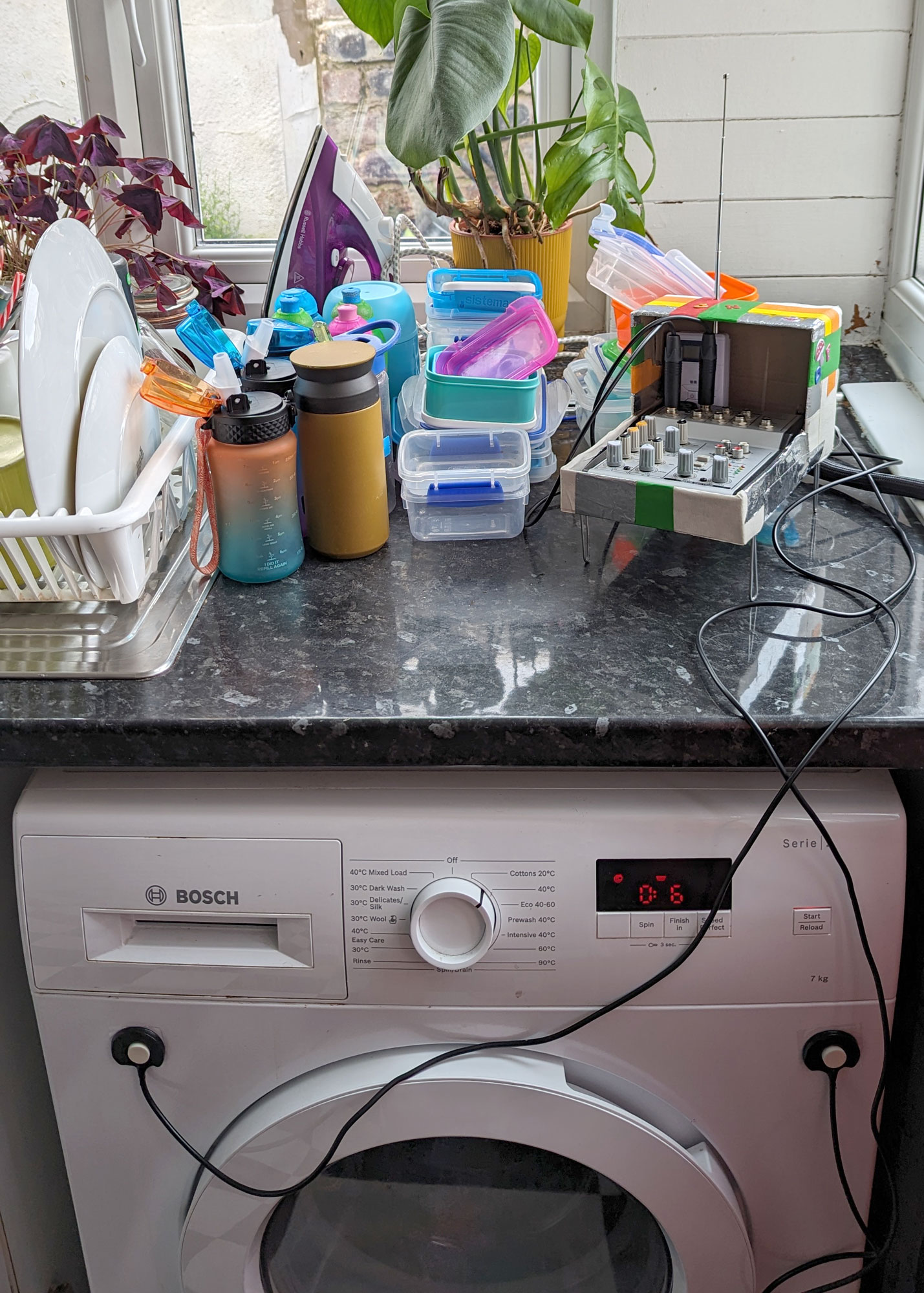

The box on the kitchen worktop, a speculative element of the 'phonatory' subsystem, receives signals from two contact mics attached to the washing machine and, again, using low power FM, transmits them to the 'articulatory' subsystem on the shelf for further 'shaping' and streaming.